Engaging labs and peer-marking to improve practical data skills in Econometrics

Ştefania Simion

School of Economics, University of Bristol

Published May 2022

1. Introduction

Econometrics 1 is a unit for around 350 single honours Economics students in their second year of undergraduate study at the University of Bristol. It has both a theoretical and an applied focus, with topics ranging from descriptive analysis using the OLS estimator to causal analysis, introducing RCTs, IVs, DiDs and RDDs. Given the importance of data handling skills emphasised by employers[1] and the fact that some of our third-year units (e.g. applied dissertations) require data analysis skills, computer labs run in STATA were first incorporated in the unit in the academic year 2019/20.

Yet, these sessions were very poorly attended, with students claiming that only one very short STATA exam question was not a strong enough incentive to engage with the materials and develop their practical data skills. Hence, since 2020/21, the unit has been restructured to provide more incentives to gain said skills. Novel asynchronous and synchronous lab materials were introduced, and students had to engage with a set of peer-marked STATA assessments and attempt more STATA questions in the summative assignments.

2. Design of the STATA labs

In 2019/20 the only asynchronous materials available were (i) a short video at the beginning of the term, introducing the STATA interface, and (ii) a brief list of the main commands covered in the course. Students had to attend a one-hour lab every fortnight, during which they worked through a set of short exercises in a format close to those in the main textbook.[2] As students engaged very poorly with the limited asynchronous material, most of the time the labs covered only part of the questions. The labs were therefore redesigned to be supported by richer asynchronous materials and to allow students to engage more during the sessions, while working with more up-to-date and relevant datasets.

2.1 New design

First, I redesigned the asynchronous material entirely, so that students had to review two resources before each fortnightly lab:

- In a 10–20-minute video I introduced the topic of the following lab session and I guided them in real time, step by step from opening a new dataset in STATA to implementing new commands and interpreting the output. Each video was recorded in a way that allowed me to explain the concepts and show all the steps (from opening STATA until closing the

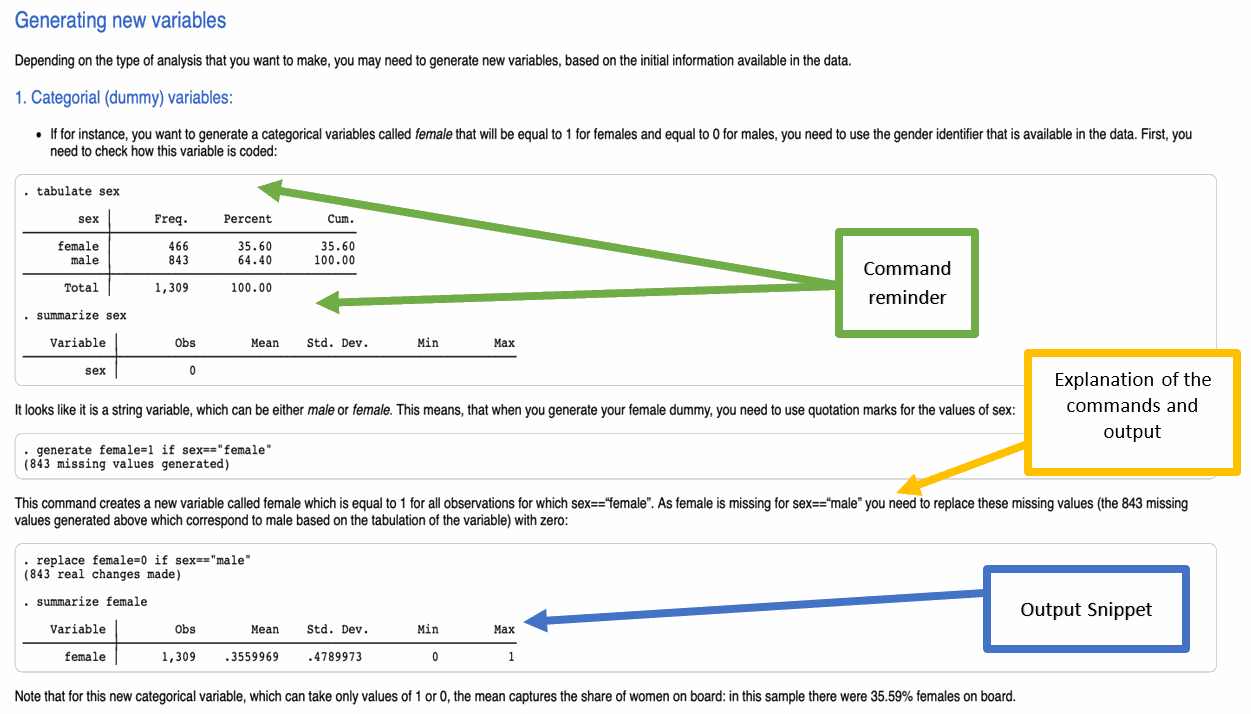

dofile), while also being short enough to keep the students’ attention. I recorded these videos on Zoom, by recording my screen. - Each video was complemented by a task, presented in an HTML format which described in more details the commands introduced in the video. Students had to work with the dataset used in the video and to practise the same commands. The task also provided comprehensive descriptions of the commands and the output given by STATA. I designed this task with Markdown,[3] as it allowed me to write comments and show the STATA output. Figure 1 is an example snapshot of a data cleaning task. Students also referred to this material as a reminder of the commands.

Figure 1: Snapshot of the Data Cleaning Task used in 2021/22

Second, the synchronous labs were redesigned to let students use commands learned in the asynchronous material, while also introducing a few new ones. For each lab, students had to work with a different dataset from the one seen in the asynchronous material or in previous labs (for example, a recent version of Airbnb data on rentals in Bristol, a sample of the quarterly UK LFS, or a snapshot of COVID-19 data on cases and deaths). While each student had their own computer, they were encouraged to discuss their answers with their peers, and the tutor was there to support them rather than to show how to answer the questions. Revealing the questions and the dataset at the beginning of the sessions helped to homogenise engagement across students.

The length of the labs was also increased to 2 hours to give more room for discussion and collaborative work and to allow students to cover all the questions during the sessions. Students received the do file with the full solutions at the end of the week.

3. Design of the Assessments

In 2019/20 the unit was assessed by a 100% final exam, which included only one STATA question, worth 5% of the final mark. The assessment for the unit was redesigned to introduce assessments during the term and to incorporate more STATA questions across the summative assessments.

3.1 New Design

First, the final exam worth 100% was replaced by a set of assessments: a final exam, together with MCQ and open-ended question assessments taken during the term.

Second, the share of STATA questions in the summative assessments rose from 5% in 2019/20 to 16% in 2020/21 and 20% in 2021/22, by including more STATA questions both in the final exam and in the MCQs. While students did not have to run actual code, they had to answer questions regarding the validity or accuracy of some given STATA code and/or interpret output.

Finally, five short, pass/fail, peer-marked STATA coding tasks were introduced. These were released after each fortnightly STATA lab, and the material was based on the topics covered up to that point in the labs (synchronous and asynchronous). For each task, students received a new dataset and had to answer five questions using it (describe the data, clean the data, run some specific regressions or tests, interpret output, propose a regression or test, produce a graph, etc.). They had to submit a do file with the code and the interpretation required for the task. Once the submissions were in, each student was randomly allocated another student’s anonymised do file and had to provide reflective feedback.
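The random allocation step can be illustrated with a minimal Python sketch. It is only an illustration of the idea, not a description of how Eduflow allocates reviews; the function name `allocate_reviews` and the student identifiers are hypothetical. The sketch assumes one review per student and shuffles the submissions, then asks each student to review the next one in the shuffled order, so that nobody receives their own do file.

```python
import random


def allocate_reviews(student_ids, seed=None):
    """Return a dict mapping each reviewer to the author whose do file they review.

    Hypothetical helper: shuffles the students, then assigns each one the next
    student in the shuffled order (wrapping around), so no one reviews themselves
    and every submission is reviewed exactly once.
    """
    ids = list(student_ids)
    if len(ids) < 2:
        raise ValueError("peer-marking needs at least two submissions")
    if len(set(ids)) != len(ids):
        raise ValueError("student identifiers must be unique")

    rng = random.Random(seed)  # a seed makes the allocation reproducible
    rng.shuffle(ids)

    return {
        reviewer: ids[(position + 1) % len(ids)]
        for position, reviewer in enumerate(ids)
    }


if __name__ == "__main__":
    # Anonymised identifiers (made up for the example).
    allocation = allocate_reviews(["s01", "s02", "s03", "s04", "s05"], seed=2022)
    for reviewer, author in sorted(allocation.items()):
        print(f"{reviewer} reviews the do file submitted by {author}")
```

A cyclic allocation like this is simpler than drawing a fully random matching, at the cost of always producing a single review chain; that is usually acceptable when identities are anonymised.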

Students were guided to provide at least one piece of constructive feedback on what could be improved and one positive statement on what had been done well. A successful submission was based on a reasonable attempt at both the questions and the review provided to the peer. If they failed either, the attempt was deemed unsuccessful. The students had to show a reasonable attempt in at least 3 out of the 5 tasks; otherwise they failed the unit.
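The pass rule described above can be written down as a short Python sketch. The names `TaskAttempt`, `task_passed` and `meets_unit_requirement` are hypothetical, and the judgement of what counts as a "reasonable attempt" is assumed to have been made by the instructor and recorded as a simple yes/no.

```python
from dataclasses import dataclass


@dataclass
class TaskAttempt:
    """One student's record for one peer-marked task (illustrative structure)."""
    reasonable_answers: bool  # reasonable attempt at the task questions
    reasonable_review: bool   # reasonable attempt at the peer review


def task_passed(attempt):
    """A task counts as successful only if both components were attempted reasonably."""
    return attempt.reasonable_answers and attempt.reasonable_review


def meets_unit_requirement(attempts, required=3):
    """True if the student passed at least `required` of the tasks (3 out of 5 here)."""
    return sum(task_passed(a) for a in attempts) >= required


if __name__ == "__main__":
    # Made-up record: three successful tasks, one missing review, one missing answers.
    record = [
        TaskAttempt(True, True),
        TaskAttempt(True, False),
        TaskAttempt(True, True),
        TaskAttempt(False, True),
        TaskAttempt(True, True),
    ]
    print("Requirement met:", meets_unit_requirement(record))
```

Running a check like this after each task is one way to spot students who can no longer afford to miss a submission.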

For the peer-marked tasks I used Eduflow, which is very user-friendly both for instructors and students. It permits students to upload/download do files effortlessly and provide feedback directly on the platform. As an instructor, one can easily monitor the submissions at all times. The platform also allowed students to reflect on the received feedback, although this was not something that we monitored as part of the pass-fail tasks.

4. Take Away

The students highly appreciated the new format of the labs, finding the material useful, easy to follow, engaging and interesting. The incentives provided seem to work as we saw a lot of engagement with the peer-marking assignments, with a large share of high-quality submissions of the do files and peer-feedback provided. There was also a considerable increase in the usage of the STATA labs materials (both asynchronous and lab attendance) and an improved performance in STATA questions across all the summative assessments, despite the increased difficulty and number of questions.

The first year of the changes coincided with the pandemic, so it was a bit more challenging to run the labs online, as students often had problems with internet connections or with accessing STATA remotely. The discussion between students (who were split into breakout rooms) was also quite limited. Once we returned to in-person teaching, this was not a problem anymore, with students engaging in discussions during the labs much more. Another big improvement has been that students received individual STATA licences, which allowed them to use their own personal computers during the lab sessions. Two-hour labs, with questions revealed at the beginning of the session, created a very engaging environment.

From a pedagogical point of view, it is very important to consider carefully the instructor time available for monitoring the submissions and the feedback. Monitoring engagement with a fortnightly peer feedback task can be time-consuming. This can be mitigated by creating tasks which can be auto-marked, but doing so limits the incentive and ability for students to provide detailed, reflective comments. Another concern with a pass/fail task is that some students may need periodic reminders if they are at risk of failing the unit, which adds administrative work.

Notes

[1] Source: Jenkins, Cloda and Lane, Stuart (2019): “Employability Skills in UK Economics Degrees: Report for the Economics Network”, https://doi.org/10.53593/n3245a

[2] Wooldridge, Jeffrey M. (2019): “Introductory econometrics: a modern approach”, 7th edition, Boston, Cengage. OCLC 1132353739

[3] I found this resource particularly useful to produce the materials in Markdown: https://data.princeton.edu/stata/markdown
